I am not going to Montreal

I will not be presenting my work at the CIGI-QUALITA 2019 conference in Montreal, because my newborn is due very soon. The associated paper can be found here. This paper is a teaser for the PhD thesis I am currently writing. I show that a simple webcam can be used with Deep Learning to detect complex defect patterns on injection-molded parts.

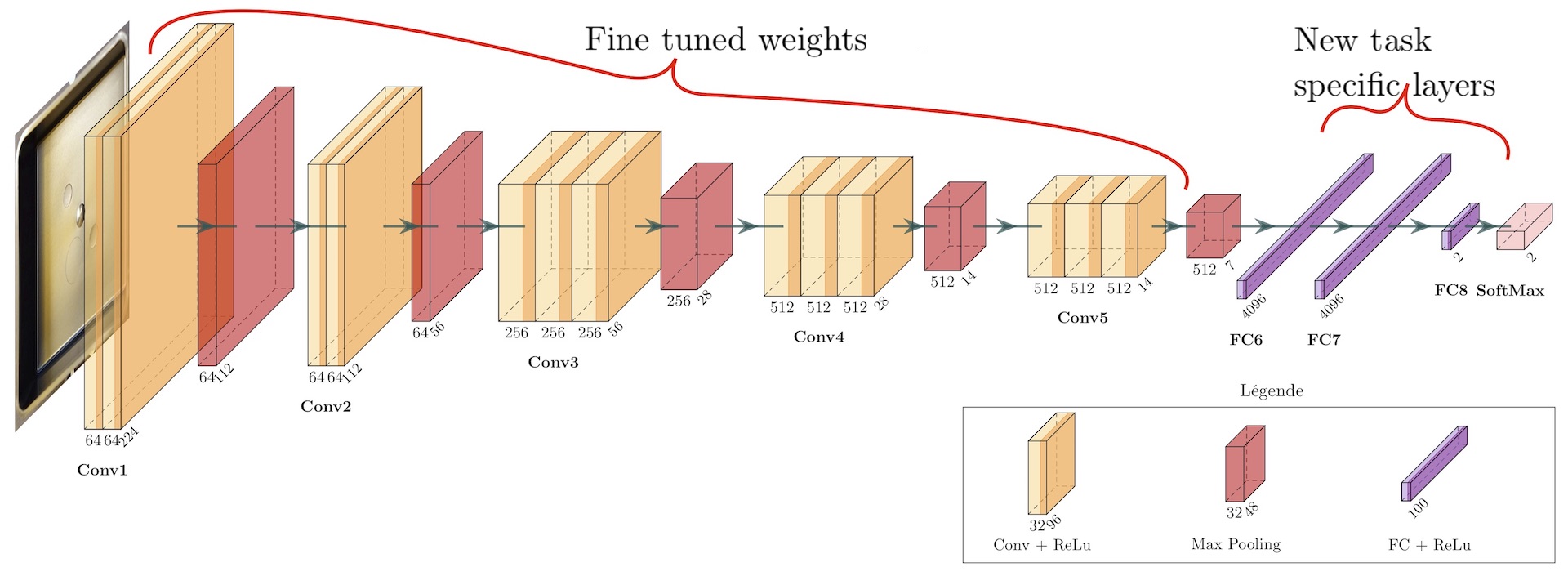

The software architecture is built around a REST API endpoint, with a GPU-powered inference server and a client system that integrates hardware sensors. When the production line starts, I have zero training data. The dataset then grows slowly as the human expert annotates parts. This is why transfer learning is necessary. I tried many different architectures, and the most robust one is VGG16.

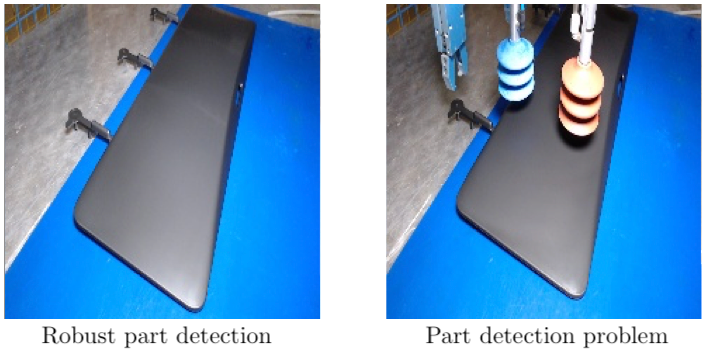

The embedded system runs on a low-power ARM device. It is responsible for robust part detection, which required fusing multiple sensors: sonar, time-of-flight and camera frame analysis.
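To make the fusion idea concrete, here is a minimal sketch in Python. The post does not describe the actual fusion rule, so the majority vote below is an assumption, and the `SensorReading` fields, the thresholds and the `part_present` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    """One synchronized sample from the three sensors (hypothetical layout)."""
    sonar_cm: float    # distance reported by the sonar
    tof_cm: float      # distance reported by the time-of-flight sensor
    frame_diff: float  # fraction of camera pixels that differ from the empty background (0..1)


# Hypothetical thresholds: real values would depend on the mounting geometry.
MAX_PART_DISTANCE_CM = 20.0
MIN_FRAME_DIFF = 0.05


def part_present(reading: SensorReading, min_votes: int = 2) -> bool:
    """Assumed fusion rule: a part is detected when at least `min_votes` sensors agree."""
    votes = [
        reading.sonar_cm < MAX_PART_DISTANCE_CM,
        reading.tof_cm < MAX_PART_DISTANCE_CM,
        reading.frame_diff > MIN_FRAME_DIFF,
    ]
    return sum(votes) >= min_votes
```

With this rule, a single faulty sensor (for example a sonar echo that misses the part) does not prevent detection, while a single false trigger is not enough to report a part.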

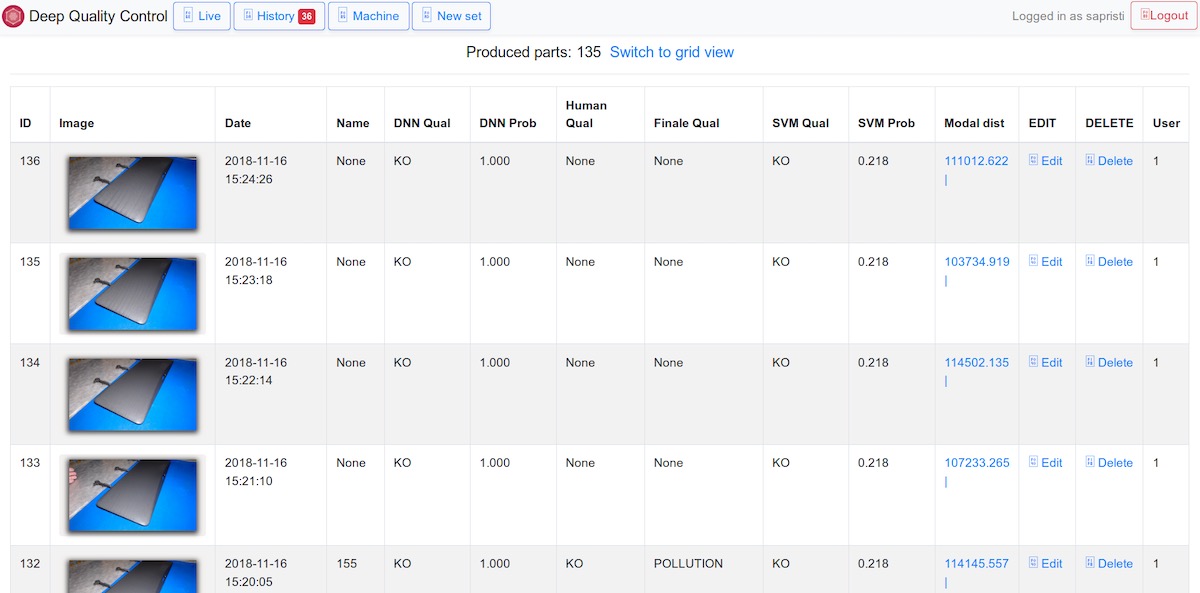

Finally, I designed a simple Flask UI so the user can train the Deep Learning model while the production line is running. Every ten newly annotated parts, the neural network model is retrained.
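The "retrain every ten annotations" rule can be sketched as a small counter in Python. This is only an illustration: `AnnotationBuffer` and its `retrain` callback are hypothetical names, and retraining on all samples annotated so far (rather than only the latest ten) is an assumption, since the post does not say which is used.

```python
from typing import Callable, List, Tuple

Sample = Tuple[str, str]  # (image path, label given by the human expert)

RETRAIN_EVERY = 10  # from the post: retrain every ten newly annotated parts


class AnnotationBuffer:
    """Collects annotations and triggers a retraining callback every `every` new samples."""

    def __init__(self, retrain: Callable[[List[Sample]], None], every: int = RETRAIN_EVERY):
        self._retrain = retrain
        self._every = every
        self._samples: List[Sample] = []
        self._since_last_training = 0

    def add(self, image_path: str, label: str) -> bool:
        """Store one annotation; return True if this call triggered a retraining."""
        self._samples.append((image_path, label))
        self._since_last_training += 1
        if self._since_last_training >= self._every:
            # Assumption: retrain on everything annotated so far.
            self._retrain(list(self._samples))
            self._since_last_training = 0
            return True
        return False
```

In a Flask application, `add` would typically be called from the route that receives an annotation, with `retrain` handing the job to the inference server so that the UI stays responsive.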

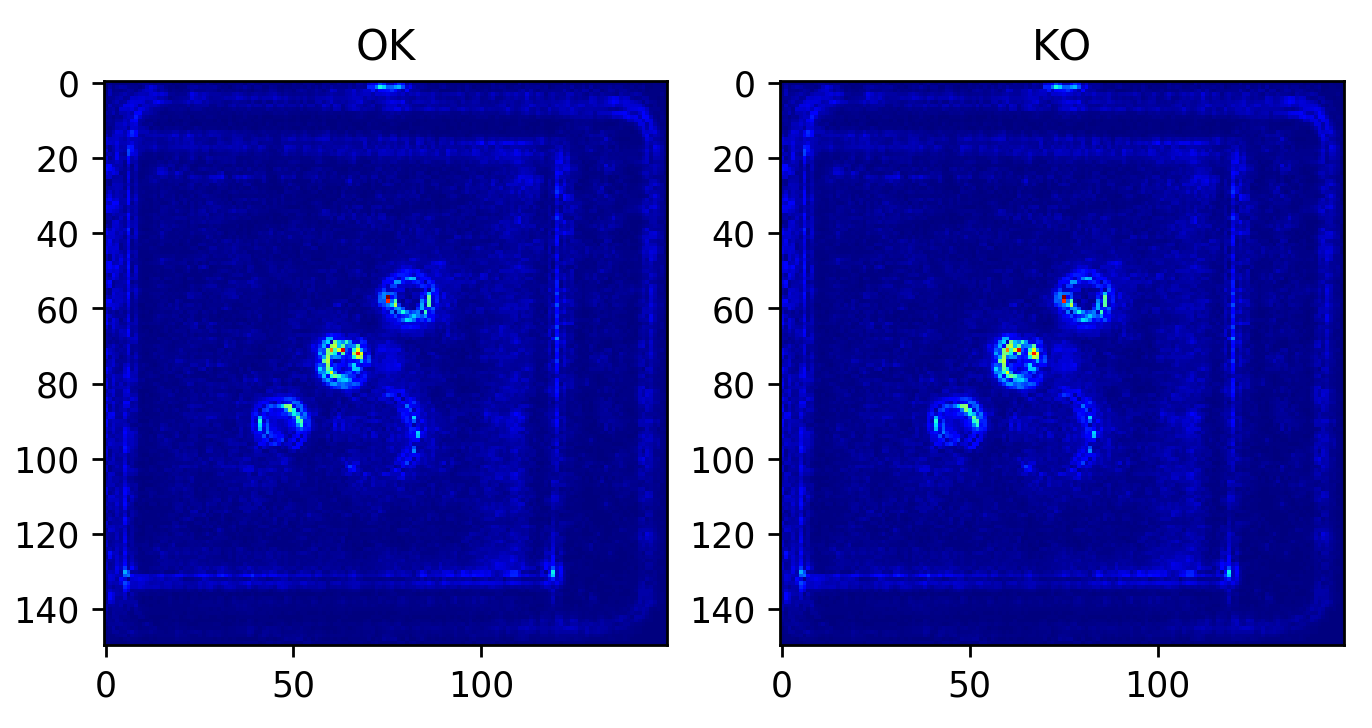

To explain what the neural network sees, guided backpropagation is used. The saliency image shows that the model is sensitive to the part geometry and to defects.
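For readers unfamiliar with guided backpropagation, the sketch below shows its core rule on a single ReLU layer, in plain Python. It is a toy illustration, not the code used on VGG16: the function names are hypothetical, and a real saliency map applies this rule at every ReLU while backpropagating down to the input image.

```python
from typing import List


def relu_backward_plain(x: List[float], grad_out: List[float]) -> List[float]:
    """Standard ReLU gradient: pass the gradient where the forward input was positive."""
    return [g if v > 0 else 0.0 for v, g in zip(x, grad_out)]


def relu_backward_guided(x: List[float], grad_out: List[float]) -> List[float]:
    """Guided backpropagation: additionally drop negative incoming gradients,
    so only features that positively support the output remain visible."""
    return [g if (v > 0 and g > 0) else 0.0 for v, g in zip(x, grad_out)]


x = [1.0, -2.0, 3.0, 0.5]          # inputs seen by the ReLU during the forward pass
grad_out = [0.4, 0.7, -0.9, 0.2]   # gradient arriving from the layer above

plain = relu_backward_plain(x, grad_out)    # [0.4, 0.0, -0.9, 0.2]
guided = relu_backward_guided(x, grad_out)  # [0.4, 0.0, 0.0, 0.2]
```

The third entry is the difference: the plain gradient keeps the negative value, while the guided version zeroes it, which is what tends to make the resulting saliency images cleaner.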

I am currently running industrial-scale trials of the system at IPC Technical Center and Plastic Omnium.